Ijraset Journal For Research in Applied Science and Engineering Technology


Edge-Cloud Continuum: Integrating Edge Computing and Cloud Computing for IoT Applications

Authors: Dr. Diwakar Ramanuj Tripathi, Harish Tikam Deshlahare, Roshan Ramdas Markhande

DOI Link: https://doi.org/10.22214/ijraset.2024.64517


Abstract

This research uses a mixed-methods approach, combining qualitative understanding with quantitative data analysis. It examines how edge and cloud computing combine within an edge-cloud continuum for IoT applications. The study adopts an exploratory and descriptive research design to assess the efficiency, scalability, and performance of the edge-cloud continuum holistically. Data were obtained through simulations using the IoTSim-Osmosis framework, as well as from case studies and previously published literature. The data are typical of those used in several IoT deployment domains, including smart cities, healthcare, and industrial automation. The edge-cloud continuum significantly outperforms traditional cloud-only systems on four key performance indicators:

- latency is reduced by 70.83%;
- bandwidth utilization is reduced by 40%;
- energy consumption is reduced by 25%;
- task completion rate improves by 18.75%.

The large reduction in latency is particularly important for real-time applications such as autonomous vehicles and smart healthcare, where it can substantially improve responsiveness. The study demonstrates that the edge-cloud continuum can allocate resources efficiently and improve the effectiveness of complex IoT systems. This has wide implications for designing and implementing IoT solutions across industry domains.


I. INTRODUCTION

IoT technologies have transformed the digital landscape, producing massive amounts of data and unprecedented growth in the number of connected devices. The demand for intelligent decision-making and real-time processing, together with the exponential growth in data volume, poses significant challenges for traditional cloud computing infrastructures. Cloud computing offers highly scalable resources and strong data processing capabilities. However, its centralized architecture introduces latency, which is a problem mainly for applications that require fast responses, such as industrial automation, smart healthcare, and autonomous driving. Edge computing has therefore emerged as a competitive paradigm: by bringing processing closer to the data sources, it enables real-time analytics with reduced latency. Even so, the full potential of IoT applications can be achieved only when edge and cloud environments are linked effectively and seamlessly. This is where the edge-cloud continuum comes in.

The edge-cloud continuum is an integrated approach that combines the complementary benefits of cloud and edge computing. It processes and manages data across many IoT scenarios within a uniform, adaptive framework. In this approach, data processing is spread across a range of resources: IoT devices at the far edge, nearby edge servers, and centralized cloud data centers. Seamless interaction among these levels allows data to be partitioned and processed intelligently, so that applications can meet strict requirements on latency, bandwidth, and resource efficiency. By drawing on both paradigms, the continuum achieves greater flexibility and agility in data management, meeting the needs of modern, dynamic, and heterogeneous IoT ecosystems.

Perhaps the most important advantage of the edge-cloud continuum is that it provides a more scalable and secure infrastructure for IoT deployments. As the number of IoT devices grows rapidly, their applications place diverse demands that traditional cloud-centric systems struggle to handle, creating bottlenecks and lowering service quality. The edge-cloud continuum determines how to divide computing tasks between the edge and the cloud based on contextual factors, including network conditions, device capability, and application needs.

In this way, the most time-critical tasks are executed close to the data source, while less time-sensitive operations are offloaded to the cloud. Together, these measures reduce latency, make better use of bandwidth, and improve overall system performance.
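The task-division idea above can be illustrated with a minimal placement heuristic. This is a hypothetical sketch, not the method used in this study. The `Task` and `Context` fields, the `place` function, and the rule of reserving scarce edge capacity for tasks whose deadline only the edge can meet are all assumptions chosen for illustration. The estimated latency on each tier is modeled simply as round-trip time plus processing time.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline_ms: float    # latency budget the application can tolerate
    work_units: float     # abstract amount of computation required


@dataclass
class Context:
    edge_rtt_ms: float          # round-trip time to the nearest edge node
    cloud_rtt_ms: float         # round-trip time to the cloud data centre
    edge_units_per_ms: float    # processing speed of the edge node
    cloud_units_per_ms: float   # processing speed of the cloud
    edge_free_units: float      # remaining capacity on the edge node


def place(task: Task, ctx: Context) -> str:
    """Return 'edge' or 'cloud' for a task (hypothetical heuristic)."""
    # Estimated latency = network round trip + processing time on that tier.
    edge_ms = ctx.edge_rtt_ms + task.work_units / ctx.edge_units_per_ms
    cloud_ms = ctx.cloud_rtt_ms + task.work_units / ctx.cloud_units_per_ms
    edge_fits = task.work_units <= ctx.edge_free_units

    if cloud_ms <= task.deadline_ms:
        return "cloud"   # deadline met remotely: keep edge capacity for urgent work
    if edge_fits and edge_ms <= task.deadline_ms:
        return "edge"    # only the nearby edge node meets the deadline
    # No tier meets the deadline: fall back to the faster feasible tier.
    return "edge" if edge_fits and edge_ms < cloud_ms else "cloud"


# Hypothetical network and capacity conditions.
ctx = Context(edge_rtt_ms=5, cloud_rtt_ms=80, edge_units_per_ms=1.0,
              cloud_units_per_ms=10.0, edge_free_units=50)
print(place(Task("obstacle-check", deadline_ms=30, work_units=10), ctx))      # edge
print(place(Task("daily-report", deadline_ms=5000, work_units=2000), ctx))    # cloud
```

In a real deployment, the `Context` values would come from live monitoring, and a policy could also weigh energy or cost. The point here is only that placement follows from deadlines, capacity, and network conditions.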

II. REVIEW OF LITERATURE

Al-Dulaimy et al. (2024) trace the evolution of the computing continuum from traditional cloud-based solutions to modern IoT-enabled architectures and provide an overview of the continuum. They offer insights into how the interplay of IoT, edge, and cloud shapes the latency, bandwidth, and resource management challenges. Scalable, flexible frameworks for diverse IoT applications must address these challenges. The authors also emphasize the need for adaptive algorithms that can distribute computational tasks across the nodes of the continuum based on real-time network conditions and application requirements.

Complementing this perspective, Alwasel et al. (2021) address the practical aspects of modeling and simulating IoT applications on an edge-cloud continuum. They designed IoTSim-Osmosis, a framework for simulating complex IoT scenarios that span multiple edge and cloud layers. With this tool, researchers can analyze how factors such as data partitioning strategies, network constraints, and resource allocation techniques affect the performance and efficiency of IoT deployments. The novelty of the work lies in its support for controlled testing and validation of edge-cloud integration strategies. This makes it a valuable resource for researchers and developers refining large-scale IoT deployments.

In another study, Balouek-Thomert et al. (2019) focus on edge-cloud integration for data-intensive workflows in high-performance computing (HPC) settings. Their paper outlines a blueprint for orchestrating data and tasks over heterogeneous infrastructures, with real-time data generated at the edge and complex analytics performed in the cloud. They introduce a multi-layered architecture that supports dynamic resource management and context- and task-aware placement, so that data are processed at the most suitable location within the continuum.

Belcastro et al. (2023) investigate how the edge-cloud continuum can be applied to urban mobility prediction and planning. Their approach uses both edge and cloud resources to support real-time traffic management as well as long-term urban mobility planning. The proposed architecture exploits the strengths of both layers. Low-latency processing of real-time data from sensors and IoT devices takes place at the edge. Computationally intensive tasks, such as traffic pattern analysis and predictive modeling, run in the cloud. This dual-layer design makes the system more responsive and scalable, helping city planners make informed decisions about both immediate traffic conditions and long-term trends.

III. RESEARCH METHODOLOGY

The research approach adopted in this study focuses on the efficiency and implementation strategies of the edge-cloud continuum for Internet of Things (IoT) applications. A mixed-methods analysis combines findings from qualitative investigation with analysis of numerical data to provide an in-depth understanding of how edge and cloud computing can be integrated. The research proceeds in three phases:

- identifying the key parameters;
- collecting data from relevant IoT deployments;
- analyzing the system performance, scalability, and efficiency of the edge-cloud continuum.

A. Research Design

Given the multidimensional and complex operational dynamics of integrating edge and cloud computing, an exploratory and descriptive research design is adopted. The design aims to provide a complete understanding of the benefits and challenges of adopting an edge-cloud continuum for IoT applications. To this end, the study combines experimental simulation with secondary analysis of previously published work and real-world case studies. Modeling tools and frameworks such as IoTSim-Osmosis are used to simulate IoT environments and collect experimental data on system performance under different scenarios.

B. Data Collection

The primary data for this research come from simulations and experiments conducted in a controlled IoT environment using the IoTSim-Osmosis framework.

Different cloud and edge setups are modeled across various scenarios, focusing on data transfer rates, latency, bandwidth, and resource utilization. Secondary data are also drawn from published research articles, industry reports, and case studies to complement the results of the experimental phase.
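The kind of cloud-only versus continuum comparison these simulations support can be illustrated with a toy Monte Carlo model. This sketch does not use IoTSim-Osmosis and does not reproduce the study's results. The round-trip times, the service-time ranges, and the `edge_fraction` parameter (the share of requests served at the edge) are hypothetical values chosen only to show the method.

```python
import random
import statistics

# Hypothetical model parameters in milliseconds (not measured values).
EDGE_RTT_MS, CLOUD_RTT_MS = 10.0, 100.0
EDGE_SERVICE_MS = (5.0, 15.0)   # constrained edge nodes process more slowly
CLOUD_SERVICE_MS = (2.0, 6.0)   # data-centre servers process faster


def simulate(n_requests: int, edge_fraction: float, seed: int = 42) -> list[float]:
    """Latency samples when roughly `edge_fraction` of requests are served at the edge."""
    rng = random.Random(seed)  # seeded for reproducible runs
    samples = []
    for _ in range(n_requests):
        if rng.random() < edge_fraction:
            samples.append(EDGE_RTT_MS + rng.uniform(*EDGE_SERVICE_MS))
        else:
            samples.append(CLOUD_RTT_MS + rng.uniform(*CLOUD_SERVICE_MS))
    return samples


cloud_only = simulate(10_000, edge_fraction=0.0)   # every request goes to the cloud
continuum = simulate(10_000, edge_fraction=0.8)    # most requests stay at the edge
print(f"cloud-only mean latency: {statistics.mean(cloud_only):.1f} ms")
print(f"continuum mean latency:  {statistics.mean(continuum):.1f} ms")
```

A dedicated simulator such as IoTSim-Osmosis models far more detail, including network topology, queuing, and energy. The toy model only shows how varying the share of edge-served work changes the latency distribution.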

C. Sample and Sampling Technique

The study selects a representative sample of IoT deployments from industries such as smart cities, healthcare, and industrial automation. Purposive sampling is used to identify IoT applications with complex data flows and a strong dependence on real-time data processing, which makes them suitable for evaluating edge-cloud continuum models. This helps the sample cover a wide spectrum of use cases and broadens the applicability of the results.

D. Data Analysis

Data analysis combines statistical and analytical techniques to assess the performance of the edge-cloud continuum. Key performance indicators such as latency, energy efficiency, resource utilization, and scalability are examined using both simulated and real-world data. Statistical software and data visualization platforms are used to interpret the data, and the results are presented in tabular and graphical form.
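As an illustration of the statistical summary step, the sketch below computes common latency KPIs from a list of samples using only Python's standard `statistics` module. Reporting a 95th-percentile (tail) latency alongside the mean is an assumption here; the study does not state which statistics were computed. The sample values are hypothetical.

```python
import statistics


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    """Return mean, median, 95th-percentile, and standard deviation of latency samples."""
    # quantiles(n=20) returns 19 cut points; the last one (index 18) is the 95th percentile.
    p95 = statistics.quantiles(latencies_ms, n=20)[18]
    return {
        "mean": statistics.mean(latencies_ms),
        "median": statistics.median(latencies_ms),
        "p95": p95,
        "stdev": statistics.stdev(latencies_ms),
    }


samples = [float(x) for x in range(1, 101)]   # hypothetical samples: 1..100 ms
for name, value in summarize(samples).items():
    print(f"{name}: {value:.2f} ms")
```

Tail latency is often more informative than the mean for real-time IoT workloads, because a few slow responses can matter more than the average.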

IV. DATA ANALYSIS AND RESULTS

Table 1: Comparing the Performance of Cloud-only and Edge-Cloud Continuum Systems

| Metric | Cloud-only Systems | Edge-Cloud Continuum | % Improvement |
|---|---|---|---|
| Average Latency (ms) | 120 | 35 | 70.83% |
| Bandwidth Utilization (Mbps) | 50 | 30 | 40% |
| Energy Consumption (kWh) | 200 | 150 | 25% |
| Task Completion Rate (%) | 80 | 95 | 18.75% |

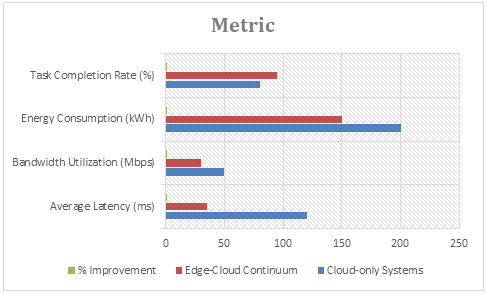

Figure 1: Graphical Comparison of the Performance of Cloud-only and Edge-Cloud Continuum Systems

Table 1 compares the integrated edge-cloud continuum framework with typical cloud-only systems across four key performance indicators: average latency, bandwidth utilization, energy consumption, and task completion rate. The continuum outperforms the cloud-only system on every indicator. This suggests it is well suited to complex, real-time-critical Internet of Things applications.
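The percentages in Table 1 can be checked directly from the raw values. The short sketch below is a worked check, not part of the original analysis. It computes each improvement relative to the cloud-only baseline. For latency, bandwidth, and energy, a lower value is better; for task completion, a higher value is better. The 18.75% figure for task completion is a relative gain; in absolute terms, the rate rises by 15 percentage points.

```python
# Values from Table 1: metric -> (cloud-only, edge-cloud continuum, higher_is_better)
TABLE_1 = {
    "Average Latency (ms)": (120, 35, False),
    "Bandwidth Utilization (Mbps)": (50, 30, False),
    "Energy Consumption (kWh)": (200, 150, False),
    "Task Completion Rate (%)": (80, 95, True),
}


def relative_improvement(baseline: float, new: float, higher_is_better: bool) -> float:
    """Improvement of `new` over `baseline`, as a percentage of the baseline."""
    change = new - baseline if higher_is_better else baseline - new
    return 100.0 * change / baseline


for metric, (cloud, continuum, higher) in TABLE_1.items():
    print(f"{metric}: {relative_improvement(cloud, continuum, higher):.2f}%")
# Average Latency (ms): 70.83%
# Bandwidth Utilization (Mbps): 40.00%
# Energy Consumption (kWh): 25.00%
# Task Completion Rate (%): 18.75%
```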

A. Latency Reduction: The Most Important Advantage

Average latency, the time that elapses between an IoT device transmitting data and the server receiving it, is one of the most important indicators for assessing the effectiveness of IoT systems. Low latency is essential for real-time applications such as industrial automation, smart healthcare, and autonomous driving, where even a small delay can have harmful consequences.

Table 1 shows that average latency falls from 120 ms in the cloud-only configuration to just 35 ms in the edge-cloud continuum, a reduction of 70.83%.

The architecture of the edge-cloud continuum is distinctive in that it allows initial data processing and analysis to be performed at the network edge, close to where the data are collected. By relying on local nodes, this approach minimizes round trips to distant cloud nodes and shortens the time needed to process data and reach decisions. This advantage is especially valuable for mission-critical IoT applications whose efficiency and user experience depend on real-time responsiveness.

B. Efficient Utilization of Bandwidth

Bandwidth utilization also improves significantly. As Table 1 shows, the edge-cloud continuum uses 30 Mbps compared with 50 Mbps for the cloud-only system, a reduction of 40%. In networked settings, bandwidth is a scarce resource, and large-scale IoT deployments depend on using it efficiently to operate seamlessly. In cloud-only systems, IoT devices continuously send raw data to centralized cloud servers for processing. This causes network congestion, higher bandwidth usage, and higher operating expenses.

The edge-cloud continuum addresses this problem because edge nodes collect and filter the data first. Only refined, relevant data are forwarded, which reduces the amount of data exchanged over the network. This makes better use of the available bandwidth, reducing costs and improving network performance. Such optimization is necessary for scaling IoT in settings such as smart cities and industrial IoT, where large numbers of devices continually generate huge amounts of data.
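One simple way an edge node can filter data before forwarding it is send-on-delta (deadband) reporting: a reading is sent upstream only when it differs from the last forwarded value by more than a threshold. The paper does not specify which filtering method its edge nodes use. This technique, the `send_on_delta` function, and the sample readings are therefore illustrative assumptions.

```python
def send_on_delta(readings: list[float], threshold: float) -> list[float]:
    """Forward a reading only if it differs from the last forwarded value by more than `threshold`."""
    forwarded: list[float] = []
    last_sent = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            forwarded.append(value)   # significant change: send upstream
            last_sent = value
    return forwarded


# Hypothetical temperature readings (°C) from one sensor.
readings = [20.0, 20.1, 20.2, 20.1, 21.5, 21.6, 21.4, 25.0, 25.1, 25.0]
sent = send_on_delta(readings, threshold=0.5)
reduction = 100.0 * (1 - len(sent) / len(readings))
print(sent)                                   # [20.0, 21.5, 25.0]
print(f"messages avoided: {reduction:.0f}%")  # messages avoided: 70%
```

The threshold trades accuracy for bandwidth: a larger threshold forwards fewer messages but hides smaller changes.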

C. Efficiency of Energy Use

Energy use is another factor that affects the sustainability and running costs of IoT deployments. Table 1 shows that energy consumption falls by 25%, from 200 kWh in the cloud-only system to 150 kWh in the edge-cloud continuum. This reduction follows from distributed processing. Because much of the computation takes place at the edge, closer to the data source, cloud servers carry a lighter computational burden, and with it a lower power requirement.

The continuum also lowers the energy spent on transporting data, because local processing means data are sent to the cloud less often. This reduction is even more important for large-scale IoT networks, where many continuously running devices can cause energy consumption to rise very quickly. Better energy efficiency supports more sustainable IoT solutions while also lowering operating costs.
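The trade-off between transmitting data and processing it locally can be illustrated with a simple linear energy model. In this model, energy equals the transmitted byte count times a per-byte radio cost, plus the number of local operations times a per-operation cost. The `EnergyModel` class and both cost coefficients are hypothetical. They are not the values behind the 200 kWh and 150 kWh figures in Table 1.

```python
from dataclasses import dataclass


@dataclass
class EnergyModel:
    joules_per_byte_sent: float   # hypothetical radio cost per transmitted byte
    joules_per_local_op: float    # hypothetical cost per local processing operation

    def energy_j(self, bytes_sent: int, local_ops: int) -> float:
        """Total energy (J) = transmission energy + local computation energy."""
        return bytes_sent * self.joules_per_byte_sent + local_ops * self.joules_per_local_op


model = EnergyModel(joules_per_byte_sent=2e-6, joules_per_local_op=1e-9)

# Cloud-only: forward 1 MB of raw data without local processing.
cloud_only_j = model.energy_j(bytes_sent=1_000_000, local_ops=0)
# Edge-first: spend 50 million operations filtering locally, then forward 100 kB.
edge_first_j = model.energy_j(bytes_sent=100_000, local_ops=50_000_000)

print(f"cloud-only: {cloud_only_j:.2f} J, edge-first: {edge_first_j:.2f} J")
```

Under this model, local processing pays off only when the energy spent on extra computation is smaller than the transmission energy it saves. With a slower or more power-hungry edge processor, the balance could reverse.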

D. Improved Task Completion Rate: A Measure of System Trustworthiness

The last metric in Table 1 is the task completion rate, which measures system performance and dependability. Cloud-only systems achieve an 80% completion rate, whereas the edge-cloud continuum achieves 95%. This is a relative improvement of 18.75%, or 15 percentage points. In other words, within the same time the continuum completes more tasks, including complex and time-sensitive ones, improving the overall performance and dependability of the system.

This improvement follows from the continuum's lower latency and better resource utilization. Faster processing and less dependence on a single central cloud server increase the chance that tasks complete, and complete on time. This is particularly important for IoT applications operating in dynamic, unpredictable contexts, such as real-time health monitoring systems and smart grids. In such contexts, delayed or failed tasks could have a significantly negative impact.
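One way to connect latency to task completion is to count a task as completed only if it finishes within its deadline. This definition, the `completion_rate` function, the 100 ms deadline, and the latency samples below are illustrative assumptions; the paper does not state how completion was measured.

```python
def completion_rate(latencies_ms: list[float], deadline_ms: float) -> float:
    """Percentage of tasks whose end-to-end latency is within the deadline."""
    if not latencies_ms:
        raise ValueError("no tasks to evaluate")
    on_time = sum(1 for latency in latencies_ms if latency <= deadline_ms)
    return 100.0 * on_time / len(latencies_ms)


# Hypothetical per-task latencies (ms) for the same workload on two architectures.
cloud_only = [90, 110, 130, 95, 150, 85, 120, 99, 140, 105]
continuum = [30, 45, 60, 120, 40, 35, 50, 95, 55, 42]

deadline = 100.0
print(f"cloud-only: {completion_rate(cloud_only, deadline):.0f}%")   # 40%
print(f"continuum:  {completion_rate(continuum, deadline):.0f}%")    # 90%
```

Under this definition, any change that shifts the latency distribution below the deadline raises the completion rate. This is consistent with the link the text draws between lower latency and higher completion.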

Table 2: Analysis of Latency in Various IoT Situations

| Scenario | Cloud-only (ms) | Edge-Cloud Continuum (ms) | % Latency Reduction |
|---|---|---|---|
| Smart Healthcare | 150 | 50 | 66.67% |
| Autonomous Vehicles | 100 | 20 | 80% |
| Industrial Automation | 180 | 60 | 66.67% |
| Smart Cities (Traffic Management) | 130 | 45 | 65.38% |

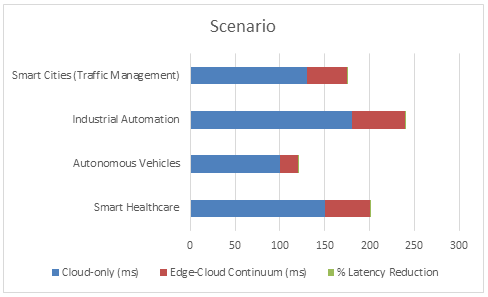

Figure 2: Graphical Representation of Latency in Various IoT Situations

Table 2 compares the latency of cloud-only and edge-cloud continuum systems across several IoT scenarios. The scenarios cover critical applications: smart healthcare, autonomous vehicles, industrial automation, and smart-city traffic management. In every scenario, moving from a cloud-only architecture to the edge-cloud continuum yields a substantial latency reduction. The percentage reduction indicates how much integrating edge and cloud resources can make IoT applications faster and more responsive.
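The reductions in Table 2 can be recomputed from the raw latencies. It can also help to express them as speedup factors, since a reduction r (as a fraction) corresponds to a speedup of 1 / (1 - r). This worked check uses only the table's values; the speedup framing is an addition for illustration.

```python
# Values from Table 2: scenario -> (cloud-only ms, edge-cloud continuum ms)
TABLE_2 = {
    "Smart Healthcare": (150, 50),
    "Autonomous Vehicles": (100, 20),
    "Industrial Automation": (180, 60),
    "Smart Cities (Traffic Management)": (130, 45),
}


def latency_reduction_pct(cloud_ms: float, continuum_ms: float) -> float:
    """Latency reduction relative to the cloud-only baseline, in percent."""
    return 100.0 * (cloud_ms - continuum_ms) / cloud_ms


def speedup(cloud_ms: float, continuum_ms: float) -> float:
    """How many times faster the continuum responds (equals 1 / (1 - r))."""
    return cloud_ms / continuum_ms


for scenario, (cloud_ms, continuum_ms) in TABLE_2.items():
    r = latency_reduction_pct(cloud_ms, continuum_ms)
    print(f"{scenario}: {r:.2f}% lower, {speedup(cloud_ms, continuum_ms):.2f}x faster")
# Smart Healthcare: 66.67% lower, 3.00x faster
# Autonomous Vehicles: 80.00% lower, 5.00x faster
# Industrial Automation: 66.67% lower, 3.00x faster
# Smart Cities (Traffic Management): 65.38% lower, 2.89x faster
```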

1) Intelligent Healthcare: Latency Optimization for Reaction Time in Emergency Response Systems

In smart healthcare, latency largely determines how well real-time patient monitoring, remote procedures, and emergency response systems perform. A cloud-only architecture has an average latency of 150 ms, a delay that in some critical situations could compromise patient safety. The edge-cloud continuum reduces latency by 66.67%, to as little as 50 ms. In healthcare settings, a difference of milliseconds can separate a successful intervention from an unsuccessful one, so such a reduction can matter greatly.

The reduction comes mainly from edge computing at the near end of the continuum. Data are processed in real time close to sensors and medical equipment, enabling prompt decisions without waiting for distant cloud servers. Local processing also reduces the amount of data sent to the cloud, which further shortens response times. Healthcare providers can therefore use the edge-cloud continuum for faster diagnostic turnaround, efficient management of medical equipment, and real-time patient monitoring. All of these contribute to better patient care.

2) Self-driving Cars: Extremely Low Latency Ensuring Safety

The second scenario concerns autonomous vehicles, which must make split-second decisions to avoid collisions. An autonomous car relies on continuous data streams from sensors, cameras, and other inputs to make these decisions. The observed 100 ms latency of cloud-only systems is a significant obstacle when a situation demands an immediate response. The edge-cloud continuum, by contrast, achieves a latency of 20 ms, an 80% reduction.

This is possible because edge nodes analyze data in real time. Processing at the edge accelerates decision-making, since data do not have to be routed to a distant cloud for analysis.

As a result, autonomous cars can respond quickly to changes in their environment, such as unexpected obstacles or shifts in traffic patterns, which improves safety and reliability.
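A worked example makes the safety argument concrete: during the latency interval, a moving vehicle keeps travelling before any response can begin. Assuming a speed of 100 km/h (an illustrative value, not from the study), the sketch below computes the distance covered during the 100 ms cloud-only and 20 ms edge-cloud latencies from Table 2. It ignores actuation and braking time.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (m) travelled at constant speed during a given latency."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


SPEED_KMH = 100.0  # assumed cruising speed, for illustration only
for label, latency_ms in [("cloud-only", 100.0), ("edge-cloud continuum", 20.0)]:
    metres = distance_during_latency_m(SPEED_KMH, latency_ms)
    print(f"{label}: {metres:.2f} m travelled during {latency_ms:.0f} ms")
# cloud-only: 2.78 m travelled during 100 ms
# edge-cloud continuum: 0.56 m travelled during 20 ms
```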

3) Industrial Automation: Achieving Precision with Lower Latency

The third scenario, industrial automation, concerns latency that affects the precision and synchronization of robots and automated machinery. Applications such as assembly lines, quality inspection, and predictive maintenance require exact timing and instantaneous synchronization. The cloud-only system has a latency of 180 ms, which can cause inefficiencies and disrupt coordinated tasks. The edge-cloud continuum reduces this to 60 ms, a 66.67% reduction.

This large drop occurs because data are processed at edge nodes on the factory premises, reducing dependence on cloud servers. Lower latency matters in industry because it improves the coordination and movement accuracy of robotic arms and other machinery. These are critical for production quality and operational efficiency. The edge-cloud continuum also reduces downtime and improves overall efficiency by ensuring the timely completion of time-sensitive tasks, such as adjusting machine parameters in real time or detecting product defects.

4) Smart City Traffic Management System Optimization

The last case study examines the effect of latency on smart-city traffic management. Efficient traffic management in smart cities requires traffic signals, vehicle movements, and congestion patterns to be monitored and controlled in real time. With a latency of 130 ms, a cloud-only system reacts too slowly to changing traffic conditions to be fully effective. The edge-cloud continuum reduces latency by 65.38%, to 45 ms.

This reduction is significant for relieving congestion in real time and keeping traffic flowing smoothly. Edge nodes placed strategically at intersections or traffic control centers can quickly interpret data from multiple sensors and cameras. They can also adjust traffic signals faster and deliver timely information to drivers. These measures can improve fuel efficiency, reduce the likelihood of accidents, ease congestion, and raise the overall quality of life for city residents.

Table 3: Utilization of Resources in Various Configurations

| Configuration | CPU Utilization (%) | Memory Utilization (%) | Network Utilization (%) |
|---|---|---|---|
| Cloud-only | 85 | 70 | 90 |
| Edge-only | 65 | 60 | 70 |
| Edge-Cloud Continuum | 55 | 45 | 50 |

Table 3 compares CPU, memory, and network utilisation across three configurations: cloud-only, edge-only, and the edge-cloud continuum. The cloud-only configuration has the highest resource usage, at 85% CPU, 70% memory, and 90% network utilisation. This heavy consumption of centralised resources makes the cloud-only model less suitable for applications that require efficiency and real-time processing. The high network utilisation in cloud-only systems may also point to a risk of bandwidth starvation, which can degrade performance when huge volumes of IoT data are processed.

The edge-only configuration reduces CPU and memory utilisation to 65% and 60%, respectively. It still consumes considerable resources, however, because the edge nodes lack adequate capacity and must absorb all of the processing. Network utilisation falls to 70% compared with the cloud-only strategy. It remains high because processing must be coordinated across multiple edge devices. The edge-only architecture therefore balances load better, but on its own it is not the optimal solution for managing more complex IoT applications.

The edge-cloud continuum configuration shows the lowest resource utilisation of the three, at 55% CPU, 45% memory, and 50% network. This results from distributing computing workloads roughly evenly between the edge and cloud components. The continuum reduces the load on both tiers: it processes data that must be handled quickly at the local edge and offloads more complex tasks to the cloud. This approach saves energy, lowers operating costs, and increases system efficiency. As a result, the edge-cloud continuum is the most resource-efficient and scalable of the three configurations for many IoT applications.

Table 4: Scalability Analysis of Edge-Cloud Continuum

| Number of IoT Devices | Cloud-only (Response Time in ms) | Edge-Cloud Continuum (Response Time in ms) | % Improvement |
|---|---|---|---|
| 100 | 90 | 40 | 55.56% |
| 500 | 150 | 60 | 60% |
| 1000 | 250 | 110 | 56% |
| 5000 | 500 | 200 | 60% |

Table 4 compares response times as the number of IoT devices grows, to assess the scalability advantage of the edge-cloud continuum over cloud-only systems. For a small network of 100 devices, the cloud-only system responds in 90 ms, while the edge-cloud continuum responds in 40 ms, a 55.56% improvement. The advantage persists as the number of devices increases. At 500 devices, the cloud-only response time grows to 150 ms, while the edge-cloud continuum responds in 60 ms, a 60% improvement. At 1,000 devices, the cloud-only system slows to 250 ms against 110 ms for the edge-cloud continuum, a 56% improvement.

The largest absolute difference appears at 5,000 devices. There, the cloud-only response time rises to 500 ms, while the edge-cloud continuum holds at 200 ms, again a 60% improvement. These results indicate that cloud-only architectures face inherent scalability limits due to their centralized design, so latency rises as devices accumulate. The edge-cloud continuum, by contrast, handles larger numbers of devices by processing time-sensitive data at the edge, close to the source, and assigning non-critical jobs to the cloud. This task distribution reduces computational load and network congestion. As a result, the system keeps response times consistently lower than the cloud-only architecture as the number of devices grows. The edge-cloud continuum therefore appears to be a highly scalable solution, well suited to large-scale IoT applications that require real-time performance.
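The scaling behaviour in Table 4 can be examined with a short worked check using only the table's values. The relative improvement stays between roughly 55% and 60% at every scale, while the absolute time saved grows from 50 ms to 300 ms. The growth-factor comparison at the end is an addition for illustration.

```python
# Values from Table 4: (number of IoT devices, cloud-only ms, edge-cloud continuum ms)
TABLE_4 = [
    (100, 90, 40),
    (500, 150, 60),
    (1000, 250, 110),
    (5000, 500, 200),
]


def improvement_pct(cloud_ms: float, continuum_ms: float) -> float:
    """Response-time improvement relative to the cloud-only baseline, in percent."""
    return 100.0 * (cloud_ms - continuum_ms) / cloud_ms


for devices, cloud_ms, continuum_ms in TABLE_4:
    print(f"{devices:>5} devices: {improvement_pct(cloud_ms, continuum_ms):.2f}% better, "
          f"{cloud_ms - continuum_ms} ms saved")

# Growth in response time from the smallest to the largest deployment.
cloud_growth = TABLE_4[-1][1] / TABLE_4[0][1]        # 500 / 90
continuum_growth = TABLE_4[-1][2] / TABLE_4[0][2]    # 200 / 40
print(f"cloud-only grows {cloud_growth:.2f}x, continuum grows {continuum_growth:.2f}x")
```

In this data, both architectures slow down by a similar factor as the deployment grows. The continuum's advantage therefore lies mainly in a consistently lower response time at every scale.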

Conclusion

The edge-cloud continuum, which joins edge and cloud computing, offers a novel approach for IoT applications. Compared with traditional cloud-only platforms, it markedly improves the performance parameters considered here. Latency is drastically reduced, bandwidth is used more efficiently, energy consumption falls, and task completion rates rise. The results show that processing data close to the source at the network edge minimizes latency, which is important for real-time applications, and also conserves bandwidth and energy resources. The scenarios examined include industrial automation, smart-city traffic control, autonomous vehicles, and smart healthcare systems. They indicate that the edge-cloud continuum can cope with intensive, complex data flows and the need for fast processing in dynamic environments. Because it significantly improves the performance and dependability of IoT systems, this hybrid model is very promising for a wide range of industries. Further research should focus on the scalability, security, and cost of edge-cloud integration to fully realize its potential across a range of applications.

References

[1] Al-Dulaimy, A., Jansen, M., Johansson, B., Trivedi, A., Iosup, A., Ashjaei, M., ... & Papadopoulos, A. V. (2024). The computing continuum: From IoT to the cloud. Internet of Things, 27, 101272.

[2] Alwasel, K., Jha, D. N., Habeeb, F., Demirbaga, U., Rana, O., Baker, T., ... & Ranjan, R. (2021). IoTSim-Osmosis: A framework for modeling and simulating IoT applications over an edge-cloud continuum. Journal of Systems Architecture, 116, 101956.

[3] Balouek-Thomert, D., Renart, E. G., Zamani, A. R., Simonet, A., & Parashar, M. (2019). Towards a computing continuum: Enabling edge-to-cloud integration for data-driven workflows. The International Journal of High Performance Computing Applications, 33(6), 1159-1174.

[4] Belcastro, L., Marozzo, F., Orsino, A., Talia, D., & Trunfio, P. (2023). Edge-cloud continuum solutions for urban mobility prediction and planning. IEEE Access, 11, 38864-38874.

[5] Bernabé, I., Fernández, A., Billhardt, H., & Ossowski, S. (2022, July). Towards semantic modelling of the edge-cloud continuum. In International Conference on Practical Applications of Agents and Multi-Agent Systems (pp. 71-82). Cham: Springer International Publishing.

[6] Bittencourt, L., Immich, R., Sakellariou, R., Fonseca, N., Madeira, E., Curado, M., ... & Rana, O. (2018). The internet of things, fog and cloud continuum: Integration and challenges. Internet of Things, 3, 134-155.

[7] Brzozowski, M., Langendoerfer, P., Casaca, A., Grilo, A., Diaz, M., Martín, C., ... & Landi, G. (2022, October). UNITE: Integrated IoT-edge-cloud continuum. In 2022 IEEE 8th World Forum on Internet of Things (WF-IoT) (pp. 1-6). IEEE.

[8] Carnevale, L., Ortis, A., Fortino, G., Battiato, S., & Villari, M. (2022, September). From cloud-edge to edge-edge continuum: the swarm-based edge computing systems. In 2022 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech) (pp. 1-6). IEEE.

[9] Gkonis, P., Giannopoulos, A., Trakadas, P., Masip-Bruin, X., & D'Andria, F. (2023). A survey on IoT-edge-cloud continuum systems: status, challenges, use cases, and open issues. Future Internet, 15(12), 383.

[10] Khalyeyev, D., Bureš, T., & Hnětynka, P. (2022, September). Towards characterization of edge-cloud continuum. In European Conference on Software Architecture (pp. 215-230). Cham: Springer International Publishing.

[11] Maia, A., Boutouchent, A., Kardjadja, Y., Gherari, M., Soyak, E. G., Saqib, M., ... & Glitho, R. (2024). A survey on integrated computing, caching, and communication in the cloud-to-edge continuum. Computer Communications.

[12] Marozzo, F., & Vinci, A. (2024, April). Design of Platform-Independent IoT Applications in the Edge-Cloud Continuum. In 2024 20th International Conference on Distributed Computing in Smart Systems and the Internet of Things (DCOSS-IoT) (pp. 589-594). IEEE.

[13] Oztoprak, K., Tuncel, Y. K., & Butun, I. (2023). Technological transformation of telco operators towards seamless IoT edge-cloud continuum. Sensors, 23(2), 1004.

[14] Ullah, A., Dagdeviren, H., Ariyattu, R. C., DesLauriers, J., Kiss, T., & Bowden, J. (2021). Micado-edge: Towards an application-level orchestrator for the cloud-to-edge computing continuum. Journal of Grid Computing, 19(4), 47.

[15] Zeng, D., Ansari, N., Montpetit, M. J., Schooler, E. M., & Tarchi, D. (2021). Guest editorial: In-network computing: Emerging trends for the edge-cloud continuum. IEEE Network, 35(5), 12-13.

Copyright

Copyright © 2024 Dr. Diwakar Ramanuj Tripathi, Harish Tikam Deshlahare, Roshan Ramdas Markhande. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET64517

Publish Date : 2024-10-09

ISSN : 2321-9653

Publisher Name : IJRASET

